As we move through 2026, the "pilot phase" of enterprise AI has officially ended. Organizations are no longer just experimenting with chatbots; they are deploying Agentic AI—autonomous systems that navigate cloud environments, execute financial transactions, and manage sensitive data workflows.

However, this shift has exposed a critical gap. Traditional Identity Governance and Administration (IGA) tools, built for human speeds and static organizational roles, are struggling to keep up with agents that operate at machine speed with ephemeral contexts.

Building on the Strategic Blueprint for runtime authorization, organizations now need a way to translate high-level governance into enforced infrastructure policy.

To bridge this gap, security leaders are turning to Reva as an automated control plane. But how does this fit into the frameworks you’re hearing about from the board, like the CSA's MAESTRO and Gartner’s AI TRiSM?

This guide maps the practical capabilities of Reva to these industry standards, transforming abstract governance into enforced, real-time policy.

The Governance Gap: Why Static IAM Fails

Before operationalizing a framework, we must address why existing tools—like your standard Okta or SailPoint implementation—aren't enough for the agentic era.

- Static vs. Ephemeral Context: Legacy IAM relies on stable roles (e.g., "Marketing Manager"). AI agents change roles dynamically: a "Research Agent" might need read access to a database one second and write access to a vector store the next.

- The Identity Explosion: Agentic workflows generate thousands of machine identities. Traditional processes designed for quarterly human access reviews cannot scale to govern millions of sub-second machine interactions.

- The Policy-Execution Gap: Standard tools stop at the "Login." AI security requires Runtime Authorization—the ability to say "No" to a specific tool call or data access request after the agent is already authenticated.
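To make the runtime-authorization point concrete, here is a minimal Python sketch. The names (`Grant`, `AgentSession`, `authorize`) are hypothetical and are not Reva's API. It assumes each agent holds short-lived, task-scoped grants instead of a standing role, and that the check runs on every tool call. As a result, an agent that is already authenticated can still be refused a specific action, and its access lapses on its own once the grant expires.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """A task-scoped permission: one action on one resource, valid until expires_at."""
    action: str
    resource: str
    expires_at: float  # Unix timestamp; grants are short-lived by design


@dataclass
class AgentSession:
    agent_id: str
    authenticated: bool  # the "Login" step that legacy IAM stops at
    grants: list[Grant] = field(default_factory=list)


def authorize(session: AgentSession, action: str, resource: str,
              now: Optional[float] = None) -> bool:
    """Runtime check executed on every tool call, not once per session."""
    if not session.authenticated:
        return False
    now = time.time() if now is None else now
    # Authentication alone is not enough: a live grant must match this exact request.
    return any(
        g.action == action and g.resource == resource and now < g.expires_at
        for g in session.grants
    )


# Usage: the same authenticated agent gets different answers per request and over time.
session = AgentSession("research-agent-7", authenticated=True, grants=[
    Grant("read", "db:customers", expires_at=1_030.0),
    Grant("write", "vectorstore:notes", expires_at=1_060.0),
])
print(authorize(session, "read", "db:customers", now=1_010.0))        # True
print(authorize(session, "write", "db:customers", now=1_010.0))       # False: no such grant
print(authorize(session, "read", "db:customers", now=1_040.0))        # False: grant expired
print(authorize(session, "write", "vectorstore:notes", now=1_040.0))  # True
```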

Operationalizing AI TRiSM with Reva

Gartner’s AI TRiSM (AI Trust, Risk, and Security Management) is one of the most widely cited frameworks for AI governance, but implementing it manually does not scale. Reva automates the "Security" and "Risk" pillars of TRiSM by turning high-level requirements into executable code.

1. From "Trust" to "Verification"

The TRiSM framework demands that AI models act within organizational values. Instead of simply "trusting" a model's system prompt, Reva enforces policies at the infrastructure level. If an agent attempts an action that violates a rule—such as an unauthorized GDPR data export—Reva blocks the action regardless of the model's "intent." This is a core component of Just-in-Time (JIT) Trust, a shift recently highlighted in the Software Analyst report on the future of AI trust, where Reva is recognized for enabling dynamic, temporary access.
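The sketch below illustrates both ideas, infrastructure-level denial and just-in-time access, under clearly stated assumptions. A hypothetical approved-region list stands in for a GDPR data-transfer rule (real GDPR compliance is far more involved), and the grant format is invented for illustration rather than taken from Reva. The deny rule is evaluated on the facts of the request; the model's justification is carried along but never read. Even a compliant export still needs a temporary grant minted for that one action.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical set of approved destinations for personal data (illustrative only).
APPROVED_REGIONS = {"eu-west-1", "eu-central-1"}


@dataclass(frozen=True)
class ExportRequest:
    agent_id: str
    dataset: str
    contains_personal_data: bool
    destination_region: str
    model_justification: str  # what the LLM claims; deliberately never read below


@dataclass(frozen=True)
class JitGrant:
    agent_id: str
    action: str
    expires_at: float


def issue_jit_grant(agent_id: str, action: str, ttl_seconds: float, now: float) -> JitGrant:
    """Mint a temporary, single-action grant instead of a standing role."""
    return JitGrant(agent_id, action, now + ttl_seconds)


def decide_export(req: ExportRequest, grant: Optional[JitGrant], now: float) -> str:
    # 1. Hard rule on the request's facts; the model's stated intent cannot override it.
    if req.contains_personal_data and req.destination_region not in APPROVED_REGIONS:
        return "deny: personal data leaving approved regions"
    # 2. Even a compliant export needs a live JIT grant for this agent and action.
    if (grant is None or grant.agent_id != req.agent_id
            or grant.action != "export" or now >= grant.expires_at):
        return "deny: no valid just-in-time grant"
    return "allow"


grant = issue_jit_grant("support-agent-2", "export", ttl_seconds=300, now=0.0)
risky = ExportRequest("support-agent-2", "crm_contacts", True, "us-east-1",
                      model_justification="The customer explicitly asked for this.")
print(decide_export(risky, grant, now=10.0))  # deny: personal data leaving approved regions
```

Note that the persuasive `model_justification` has no effect on the outcome; only the destination region and the grant's validity do.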

2. Continuous Risk Monitoring

TRiSM requires continuous monitoring to mitigate adversarial attacks like prompt injection. Reva doesn't just check access at the start of a session; it evaluates policy continuously during the agent's execution loop. This "circuit breaker" logic ensures that if an agent drifts into an unsafe state, its authority is clipped instantly.
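A minimal sketch of the circuit-breaker pattern follows. It assumes a fixed tool allow-list in place of a real policy engine, and it uses "two denials in one session" as a stand-in drift signal; both are illustrative assumptions, not Reva's actual heuristics. Policy is checked on each iteration of the loop, and once the breaker trips, even normally permitted actions are blocked for the rest of the session.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical allow-list standing in for a real policy evaluation.
PERMITTED_TOOLS = {"search_docs", "summarize"}


@dataclass
class CircuitBreaker:
    """Trips after max_denials denials in one session; once tripped, every call is blocked."""
    max_denials: int = 2
    denials: int = 0
    tripped: bool = False

    def record(self, allowed: bool) -> None:
        if not allowed:
            self.denials += 1
            if self.denials >= self.max_denials:
                self.tripped = True


def run_agent(planned_actions: list[str], breaker: CircuitBreaker) -> list[tuple[str, str]]:
    """Evaluate policy on every iteration of the execution loop, not just the first."""
    log: list[tuple[str, str]] = []
    for action in planned_actions:
        if breaker.tripped:
            log.append((action, "blocked: breaker tripped"))
            continue
        allowed = action in PERMITTED_TOOLS
        breaker.record(allowed)
        log.append((action, "allowed" if allowed else "denied"))
    return log


steps = ["search_docs", "export_all_users", "delete_index", "summarize"]
for action, outcome in run_agent(steps, CircuitBreaker()):
    print(action, "->", outcome)
```

Here `summarize` is normally allowed, but it arrives after two denials have tripped the breaker, so the agent's authority is already clipped and the call is blocked.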

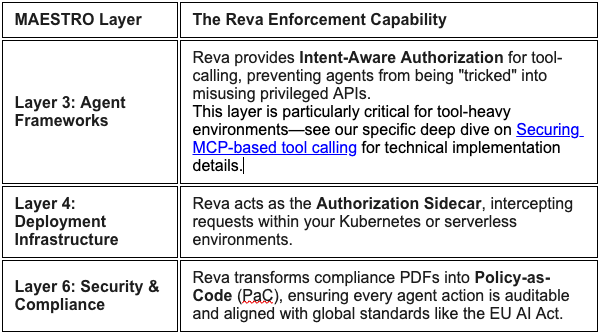

Mapping Reva to the MAESTRO Framework

The Cloud Security Alliance's MAESTRO framework (Multi-Agent Environment, Security, Threat, Risk, and Outcome) is a threat-modeling framework that identifies where threats arise across seven layers of the agentic AI stack. Reva supplies the runtime controls that mitigate those threats.
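One way to keep such a mapping auditable is to tag each enforced rule with the MAESTRO layer whose threats it addresses, then roll the rules up by layer. The sketch below assumes the seven layer names from CSA's published framework; the three rules and their layer assignments are hypothetical examples, not Reva's actual control catalog. The rollup surfaces layers that have a threat model but no enforced control yet.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class MaestroLayer(IntEnum):
    """The seven MAESTRO layers, numbered as in the CSA framework."""
    FOUNDATION_MODELS = 1
    DATA_OPERATIONS = 2
    AGENT_FRAMEWORKS = 3
    DEPLOYMENT_AND_INFRASTRUCTURE = 4
    EVALUATION_AND_OBSERVABILITY = 5
    SECURITY_AND_COMPLIANCE = 6
    AGENT_ECOSYSTEM = 7


@dataclass(frozen=True)
class PolicyRule:
    rule_id: str
    layer: MaestroLayer  # which layer's threats this control mitigates
    description: str


# Hypothetical rules; the layer assignments are illustrative judgment calls.
RULES = [
    PolicyRule("deny-pii-export", MaestroLayer.DATA_OPERATIONS,
               "Block personal-data export outside approved regions"),
    PolicyRule("tool-allowlist", MaestroLayer.AGENT_FRAMEWORKS,
               "Agents may only call allow-listed tools"),
    PolicyRule("refund-cap", MaestroLayer.AGENT_ECOSYSTEM,
               "Deny refunds above the configured limit"),
]


def coverage_by_layer(rules: list[PolicyRule]) -> dict[str, int]:
    """Count rules per layer, including layers with no rules at all."""
    counts = Counter(rule.layer for rule in rules)
    return {layer.name: counts[layer] for layer in MaestroLayer}


def uncovered_layers(rules: list[PolicyRule]) -> list[str]:
    """Layers that have no corresponding enforced control yet."""
    return [name for name, n in coverage_by_layer(rules).items() if n == 0]


print(uncovered_layers(RULES))
```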

The Power of Policy-as-Code (PaC)

In 2026, Policy-as-Code has become a technical necessity. By translating human-readable guidelines into executable rules (using industry-standard languages like Cedar or Rego), organizations can finally close the gap between the compliance office and the production environment.
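Cedar and Rego each have their own syntax, and the sketch below uses neither. It is plain Python that mirrors two evaluation ideas Cedar is known for, default deny and forbid-overrides-permit, and it stores each rule's human-readable guideline next to its executable condition so that the compliance office and engineering review the same artifact. The rules and request fields are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Request = Dict[str, Any]


@dataclass(frozen=True)
class Rule:
    effect: str                           # "permit" or "forbid"
    guideline: str                        # the human-readable source text, kept for auditors
    condition: Callable[[Request], bool]  # the executable translation of that text


PAC_RULES = [
    Rule("permit", "Support agents may read order history.",
         lambda r: r.get("role") == "support_agent"
         and r.get("action") == "read"
         and r.get("resource_type") == "order_history"),
    Rule("forbid", "No agent may read payment card data.",
         lambda r: r.get("resource_type") == "payment_card"),
]


def evaluate(rules: List[Rule], request: Request) -> Tuple[str, List[str]]:
    """Default deny; any matching forbid overrides every matching permit."""
    matched = [rule for rule in rules if rule.condition(request)]
    forbids = [rule.guideline for rule in matched if rule.effect == "forbid"]
    if forbids:
        return "deny", forbids
    permits = [rule.guideline for rule in matched if rule.effect == "permit"]
    if permits:
        return "allow", permits
    return "deny", ["no matching permit (default deny)"]


print(evaluate(PAC_RULES, {"role": "support_agent", "action": "read",
                           "resource_type": "order_history"}))
```

Returning the matched guidelines alongside the decision gives auditors a plain-language reason for every allow or deny.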

Worked Example: The "Refund Bot" Circuit Breaker

Consider a retail platform using an AI agent for customer support. A common risk is a "jailbreak" prompt that convinces the bot to issue a large, unauthorized refund. Using Reva’s PaC engine, the company sets a hard rule: deny if refund_amount > $500. Even if the LLM is fully convinced the refund is valid, the Reva enforcement layer blocks the transaction at the API level.
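A minimal sketch of where that check sits, assuming a Python refund endpoint with hypothetical names (`issue_refund`, `RefundDenied`) and a USD limit. What matters is placement: the limit is enforced inside the API handler, outside the model, so no prompt can argue its way past it. The limit is hard-coded here for brevity; `Decimal` avoids floating-point rounding on currency amounts.

```python
from decimal import Decimal

# The hard rule from the example: deny if refund_amount > 500 (USD assumed).
REFUND_LIMIT = Decimal("500.00")


class RefundDenied(Exception):
    """Raised by the enforcement layer; the payment call is never reached."""


def enforce_refund_policy(refund_amount: Decimal) -> None:
    if refund_amount > REFUND_LIMIT:
        raise RefundDenied(f"refund {refund_amount} exceeds limit {REFUND_LIMIT}")


def issue_refund(order_id: str, refund_amount: Decimal, llm_rationale: str) -> str:
    """API endpoint the agent calls. The policy check runs before any money moves;
    llm_rationale is accepted as an argument but never consulted."""
    enforce_refund_policy(refund_amount)
    return f"refunded {refund_amount} on {order_id}"  # stand-in for the real payment call


print(issue_refund("A-1001", Decimal("120.00"), "Item arrived damaged"))
try:
    issue_refund("A-1002", Decimal("5000.00"), "I am certain this customer deserves it")
except RefundDenied as err:
    print("blocked:", err)
```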

Because the rule lives in the enforcement layer rather than in the agent's code, this "Policy Decoupling" lets security teams update rules centrally and have them apply across every agent in the company without requiring a single developer to redeploy code.
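A minimal in-process sketch of that decoupling, with hypothetical class names. In a real deployment the store would be a separate policy service that agents query over the network; here a shared object plays that role. The design choice that matters is that agent code holds a reference to the policy, never a copy of the threshold, so changing the rule changes every agent's next decision.

```python
from __future__ import annotations

import threading
from decimal import Decimal


class PolicyStore:
    """Central, versioned policy state that enforcement points read at decision time."""

    def __init__(self, refund_limit: Decimal):
        self._lock = threading.Lock()
        self._refund_limit = refund_limit
        self.version = 1

    def update_refund_limit(self, new_limit: Decimal) -> None:
        with self._lock:
            self._refund_limit = new_limit
            self.version += 1

    def refund_limit(self) -> Decimal:
        with self._lock:
            return self._refund_limit


class RefundAgent:
    """Agent code: holds a reference to the store, never a hard-coded threshold."""

    def __init__(self, name: str, store: PolicyStore):
        self.name = name
        self.store = store

    def can_refund(self, amount: Decimal) -> bool:
        return amount <= self.store.refund_limit()


store = PolicyStore(refund_limit=Decimal("500"))
fleet = [RefundAgent("eu-support", store), RefundAgent("us-support", store)]
print([a.can_refund(Decimal("300")) for a in fleet])  # [True, True]
store.update_refund_limit(Decimal("250"))             # security team tightens the rule
print([a.can_refund(Decimal("300")) for a in fleet])  # [False, False], no agent redeployed
```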

Conclusion: A Necessary Evolution

Attempting to secure Agentic AI with traditional IAM is like trying to secure a modern cloud network with a physical padlock. It’s the wrong tool for the physics of the problem.

Organizations must move from "admiring the problem" of AI security to actively solving it. By layering Reva’s automated enforcement on top of frameworks like MAESTRO and AI TRiSM, you can stop treating AI as a "black box" and start treating it as a governed, enterprise-scale asset.

The Agentic Security Series:

- Part 3: Mapping reva.ai to MAESTRO and AI TRiSM (Current)