The recent debut of OpenClaw has taken the technology world by storm, serving as a watershed moment for what many are calling the "Agentic Era." It provided a viral, real-world demonstration of what is possible when AI can autonomously navigate the web, execute complex code, and complete multi-step workflows with breathtaking efficiency. For many, it was an exhilarating look at the future of productivity. For CISOs, however, it was a loud wake-up call. It highlighted an uncomfortable truth: as we move from chatbots that simply "talk" to agents that can "act," our existing security models are being pushed to their breaking point.

The promise of autonomous agents is too great for enterprises to ignore. They represent the next major leap in ROI, transforming everything from automated customer support to complex cloud infrastructure management. But the path to production is currently blocked by a significant governance gap. To move forward, the enterprise needs more than just content filters; it needs a foundational blueprint for securing autonomous actions in real time.

The CISO’s Dilemma: Shadow AI and the ROI Trap

Today’s security leaders are caught in a difficult position. On one hand, they are dealing with "Shadow AI"—autonomous agents and integrations being spun up across departments without central oversight. On the other, they are facing massive "tool sprawl." Boards are demanding ROI on every new security purchase and asking a valid question: “Why can’t our existing identity tools handle this?”

The reality is that legacy Identity and Access Management (IAM) systems were designed for a world of human logins and static permissions. As enterprises add an agentic layer to legacy applications, those apps are suddenly forced to handle complex, automated requests they weren't built for. Hardcoding security rules into every new AI project does not scale: each team ends up with its own inconsistent, hard-to-audit logic. CISOs don't want another siloed security product; they want a flexible approach that plugs into their environment, complements their existing stack, and allows them to govern AI without slowing down innovation.

Why Agents Require a New Approach to Access

The industry is beginning to coalesce around the idea that securing agents is fundamentally different from securing humans. Traditional models struggle with fragmented attribution. An agent often acts on behalf of a user, but as it delegates tasks to sub-agents or third-party tools, the link back to the original user—and their specific permissions—often gets lost in the chain.
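
To make the attribution problem concrete, here is a minimal Python sketch, assuming a hypothetical `DelegationContext` object that travels with every hop of the chain. It keeps the originating user at the head of the chain and computes effective permissions as the intersection of every party's scopes, so a sub-agent can never exceed the user who started the task. The class names and scope strings are illustrative, not part of any framework or product API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Actor:
    name: str
    scopes: frozenset


@dataclass(frozen=True)
class DelegationContext:
    """Hypothetical context object passed along every hop of an agent chain."""
    origin_user: Actor
    chain: tuple = ()

    def delegate(self, to: Actor) -> "DelegationContext":
        # Each hop appends itself; the originating user is never dropped.
        return DelegationContext(self.origin_user, self.chain + (to,))

    def effective_scopes(self) -> frozenset:
        # A delegate never holds more than every party above it in the chain.
        scopes = self.origin_user.scopes
        for actor in self.chain:
            scopes = scopes & actor.scopes
        return scopes

    def attribution(self) -> str:
        return " -> ".join([self.origin_user.name] + [a.name for a in self.chain])


alice = Actor("alice", frozenset({"crm:read", "crm:write"}))
planner = Actor("planner-agent", frozenset({"crm:read", "crm:write", "billing:read"}))
exporter = Actor("export-tool", frozenset({"crm:read", "billing:read"}))

ctx = DelegationContext(alice).delegate(planner).delegate(exporter)
print(ctx.attribution())               # alice -> planner-agent -> export-tool
print(sorted(ctx.effective_scopes()))  # ['crm:read']
```

Note that `billing:read` is held by both agents but not by Alice, so it drops out of the effective set.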

This "permissions gap" is exactly why the Cloud Security Alliance (CSA) recently released a new approach to Agentic AI IAM. They argue for a security model that can track the lifecycle of an autonomous actor across a chain of events. Similarly, Ken Huang’s "Three-Plane IAM Stack" separates the identity of the actor from the actual governance of their actions. Without this separation, organizations remain vulnerable to the risks highlighted in the OWASP Top 10 for Agentic Applications, such as excessive autonomy and unauthorized tool invocation.

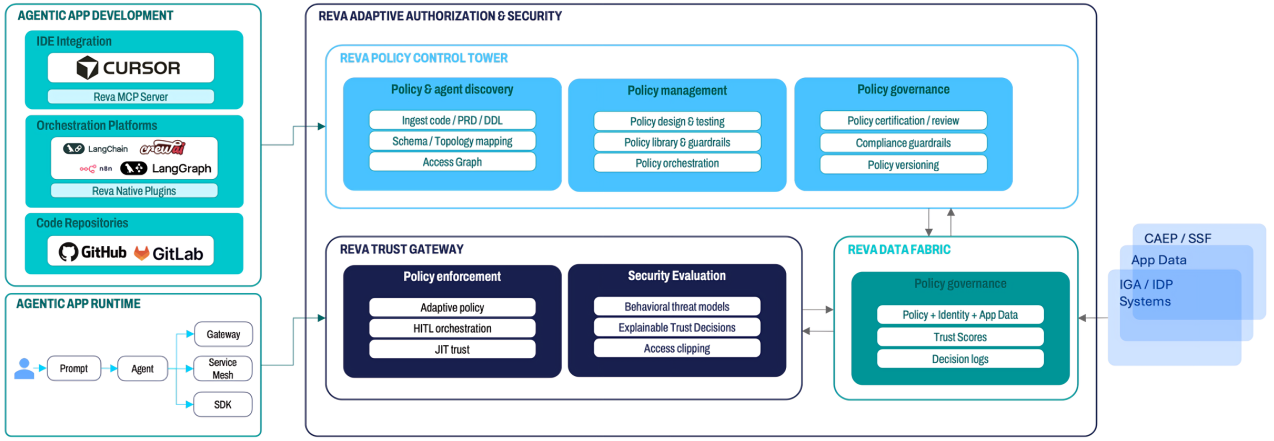

The Reva Blueprint: Bridging Governance and Action

At Reva, we built our platform to be the missing link between high-level security frameworks and actual enterprise enforcement. We believe that securing the agentic era shouldn't require a total overhaul of your infrastructure. Instead, it requires a "plug-and-play" layer that introduces five key pillars of governance:

1. Discovery and Policy Bootstrapping

You cannot govern what you cannot see. Reva begins by automatically mapping the AI landscape within your organization, identifying where agents are interacting with legacy apps and data. Once discovered, the platform "bootstraps" security by suggesting initial policies based on observed behavior. This allows security teams to move from zero visibility to a governed state in days rather than months, directly addressing the CISO’s need for immediate ROI and visibility into Shadow AI.
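
As a rough illustration of bootstrapping, the sketch below turns an observed activity log into candidate allow-rules, assuming events are already captured as (agent, action, resource) triples. The `Event` type, the `suggest_policies` function, and the `min_count` threshold are hypothetical; a real discovery pipeline would draw on far richer signals and route every suggestion through human review.

```python
from collections import Counter
from typing import List, NamedTuple


class Event(NamedTuple):
    agent: str
    action: str
    resource: str


def suggest_policies(events: List[Event], min_count: int = 3) -> List[Event]:
    """Propose allow-rules for (agent, action, resource) triples observed often enough.

    Rarely seen triples are left out on purpose, so a human reviews them
    instead of having them silently approved.
    """
    counts = Counter(events)
    return sorted(event for event, n in counts.items() if n >= min_count)


observed = (
    [Event("support-bot", "read", "tickets")] * 5
    + [Event("support-bot", "update", "tickets")] * 3
    + [Event("support-bot", "delete", "customers")]
)
for rule in suggest_policies(observed):
    print(f"SUGGEST ALLOW {rule.agent} {rule.action} {rule.resource}")
```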

2. Intent-Aware Authorization

Standard permissions are binary: you either have access or you don't. But in an agentic world, context is everything. Reva’s platform evaluates every action against the original user’s intent. If an agent attempts to access a sensitive database, Reva asks: “Is this action consistent with the original user’s request, the current risk level, and the business logic?” This prevents agents from "going rogue" by executing actions that, while technically permitted by a service account, were never intended by the user.
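
One way to picture the intent check is as a third gate layered on top of ordinary permissions. The sketch below assumes the user's request has already been reduced to a hypothetical `Intent` record of allowed actions and resources; how that reduction happens (parsing the prompt, reading a ticket, and so on) is out of scope here, and the names and risk threshold are illustrative only.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set, Tuple


@dataclass(frozen=True)
class Intent:
    """Hypothetical record of what the user actually asked for, captured at request time."""
    user: str
    actions: FrozenSet[str]
    resources: FrozenSet[str]


def authorize(
    intent: Intent,
    service_account_perms: Set[Tuple[str, str]],
    action: str,
    resource: str,
    risk: float,
    max_risk: float = 0.7,
) -> Tuple[bool, str]:
    # 1. Static check: is the action possible at all for the agent's credentials?
    if (action, resource) not in service_account_perms:
        return False, "not permitted for service account"
    # 2. Intent check: did the user's request actually call for this action?
    if action not in intent.actions or resource not in intent.resources:
        return False, "outside the user's original intent"
    # 3. Context check: is the current risk acceptable?
    if risk > max_risk:
        return False, "risk above threshold"
    return True, "allowed"


sa_perms = {("read", "orders"), ("delete", "orders")}
intent = Intent("alice", frozenset({"read"}), frozenset({"orders"}))
print(authorize(intent, sa_perms, "read", "orders", risk=0.1))    # (True, 'allowed')
print(authorize(intent, sa_perms, "delete", "orders", risk=0.1))  # (False, "outside the user's original intent")
```

The delete is denied even though the service account could perform it, which is exactly the gap between "technically permitted" and "intended by the user."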

For a deeper technical look at how this applies to the industry’s leading connectivity standard, read our guide on Securing the Model Context Protocol (MCP).

3. Runtime Guardrails and Gartner's "Guardian Agents"

While many vendors focus on content-based filters that check text for toxicity, Reva introduces Runtime Guardrails: hard controls, aligned with industry standards and enforced in the execution path, that make unauthorized actions impossible to execute. This mirrors Gartner’s concept of "Guardian Agents," a specialized layer of AI that protects the organization from other AI. Using these "guardian" policies, Reva provides continuous runtime monitoring and protection, sitting directly in the path of execution to intercept and validate tool calls before they run.
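
Unlike a content filter that inspects text, a runtime guardrail sits between the agent and the tool. A minimal in-process sketch of that interception pattern, using a Python decorator, is shown below; `guarded`, `refund_limit`, and `issue_refund` are hypothetical names. In a production deployment the check would more likely live in a gateway or proxy outside the agent's process, so the agent cannot simply skip it.

```python
import functools
from typing import Any, Callable, Dict


class GuardrailViolation(PermissionError):
    """Raised when a tool call is blocked before it runs."""


Policy = Callable[[str, Dict[str, Any]], bool]


def guarded(policy: Policy):
    """Wrap a tool so that the policy is evaluated before the tool body executes."""
    def decorator(tool):
        @functools.wraps(tool)
        def wrapper(**kwargs):
            if not policy(tool.__name__, kwargs):
                raise GuardrailViolation(f"blocked {tool.__name__}({kwargs})")
            return tool(**kwargs)
        return wrapper
    return decorator


# Hypothetical rule: an agent may never issue a refund above 100 on its own.
def refund_limit(tool_name: str, args: Dict[str, Any]) -> bool:
    return not (tool_name == "issue_refund" and args.get("amount", 0) > 100)


@guarded(refund_limit)
def issue_refund(order_id: str, amount: float) -> str:
    return f"refunded {amount} on {order_id}"


print(issue_refund(order_id="A-17", amount=40))  # refunded 40 on A-17
try:
    issue_refund(order_id="A-18", amount=5000)
except GuardrailViolation as err:
    print("guardrail:", err)
```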

4. Risk-Based Behavior Monitoring and JIT Trust

Authorization in the agentic era isn't a one-time event; it’s a continuous process. Reva continuously monitors agent behavior for anomalies—such as an agent suddenly requesting high volumes of data it has never touched before. By integrating risk scores into the decision path, the platform can automatically "clip" or reduce access in real-time if an agent’s behavior becomes suspicious.
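
The following sketch shows one simple way risk scores could feed back into access, assuming per-agent baselines of rows read per request and resources touched. The `BehaviorMonitor` class, the scoring weights, the z-score threshold, and the read-only/suspend tiers are illustrative assumptions, not a description of Reva's scoring model.

```python
import statistics
from typing import Dict, List, Set


class BehaviorMonitor:
    """Hypothetical per-agent baseline of resources touched and rows read per request."""

    def __init__(self, z_threshold: float = 3.0, min_samples: int = 5):
        self.rows: Dict[str, List[int]] = {}
        self.resources: Dict[str, Set[str]] = {}
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def risk(self, agent: str, resource: str, rows: int) -> float:
        score = 0.0
        if resource not in self.resources.get(agent, set()):
            score += 0.4  # first time this agent touches this resource
        history = self.rows.get(agent, [])
        if len(history) >= self.min_samples:
            mean = statistics.mean(history)
            spread = statistics.pstdev(history) or 1.0  # avoid dividing by zero
            if (rows - mean) / spread > self.z_threshold:
                score += 0.6  # far more data than this agent normally reads
        return min(score, 1.0)

    def record(self, agent: str, resource: str, rows: int) -> None:
        self.rows.setdefault(agent, []).append(rows)
        self.resources.setdefault(agent, set()).add(resource)


def clip_scopes(scopes: Set[str], risk: float) -> Set[str]:
    """Reduce access as risk rises instead of making a one-time allow/deny."""
    if risk >= 0.8:
        return set()  # suspend entirely
    if risk >= 0.4:
        return {s for s in scopes if s.endswith(":read")}  # read-only
    return set(scopes)


monitor = BehaviorMonitor()
for n in [95, 100, 105, 100, 98, 102]:
    monitor.record("report-agent", "tickets", n)

scopes = {"tickets:read", "tickets:write", "customers:read"}
normal = monitor.risk("report-agent", "tickets", 101)
spike = monitor.risk("report-agent", "customers", 50_000)
print(sorted(clip_scopes(scopes, normal)))  # all three scopes
print(sorted(clip_scopes(scopes, spike)))   # []
```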

This concept is a core part of the transition toward Just-in-Time (JIT) Trust, a shift recently highlighted in the Software Analyst report on the future of AI trust, where Reva is recognized for its role in enabling these dynamic trust models. Instead of giving agents permanent, "standing" privileges, Reva ensures trust is granted only for the specific task at hand, based on the real-time risk of the action.
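
Just-in-Time Trust can be sketched as a broker that issues grants tied to a single task and a short lifetime, instead of standing scopes. In this minimal Python sketch (the `JITBroker` class, the TTL formula, and the risk cut-off are all hypothetical), higher risk shortens the grant's lifetime, and expired grants are deleted rather than renewed. An injectable clock keeps the example deterministic.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Grant:
    agent: str
    task_id: str
    scopes: FrozenSet[str]
    expires_at: float


class JITBroker:
    """Hypothetical broker: agents hold no standing scopes, only short-lived, task-scoped grants."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self.clock = clock
        self.grants: Dict[Tuple[str, str], Grant] = {}

    def issue(self, agent: str, task_id: str, scopes: Iterable[str],
              risk: float, base_ttl_s: float = 300.0) -> Optional[Grant]:
        if risk >= 0.8:
            return None  # too risky to grant anything for this task
        ttl = base_ttl_s * (1.0 - risk)  # riskier tasks get shorter-lived trust
        grant = Grant(agent, task_id, frozenset(scopes), self.clock() + ttl)
        self.grants[(agent, task_id)] = grant
        return grant

    def check(self, agent: str, task_id: str, scope: str) -> bool:
        grant = self.grants.get((agent, task_id))
        if grant is None:
            return False
        if self.clock() >= grant.expires_at:
            del self.grants[(agent, task_id)]  # expired trust is revoked, not renewed
            return False
        return scope in grant.scopes


now = [0.0]
broker = JITBroker(clock=lambda: now[0])
broker.issue("report-agent", "task-42", {"sales:read"}, risk=0.5)  # 150 s lifetime
print(broker.check("report-agent", "task-42", "sales:read"))  # True
print(broker.check("report-agent", "task-43", "sales:read"))  # False: different task
now[0] = 200.0
print(broker.check("report-agent", "task-42", "sales:read"))  # False: grant expired
```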

We have mapped these runtime controls directly to global compliance requirements in our deep dive on Operationalizing AI Governance with MAESTRO and AI TRiSM.

5. Flexible Deployment and Open Standards

To solve the tool sprawl problem, Reva is designed to be deployment-agnostic. Whether your agents live in a centralized gateway, a distributed service mesh, or require an embedded SDK for low-latency decisions, Reva fits the architecture. Crucially, we are built on open standards such as AuthZEN, ensuring that our authorization APIs interoperate with your existing providers, including Okta, Entra ID (formerly Azure AD), and AWS IAM. We don't replace your security stack; we complete it.
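
For a sense of what an AuthZEN-style call looks like, the sketch below builds an access evaluation request from the subject, action, resource, and context fields that the AuthZEN Authorization API defines, and posts it to a policy decision point (PDP). The `/access/v1/evaluation` path reflects our reading of the AuthZEN Authorization API 1.0 specification and should be checked against the version your PDP implements; the PDP URL, the context keys (`delegation_chain`, `intent`), and the choice to keep the human user as the subject are assumptions for illustration.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional


def build_evaluation_request(user_id: str, agent_chain: List[str], action: str,
                             resource_type: str, resource_id: str,
                             intent: Optional[str] = None) -> Dict[str, Any]:
    # The human stays the subject; agents ride along in the free-form context (assumption).
    return {
        "subject": {"type": "user", "id": user_id},
        "action": {"name": action},
        "resource": {"type": resource_type, "id": resource_id},
        "context": {"delegation_chain": agent_chain, "intent": intent},
    }


def evaluate(pdp_base_url: str, payload: Dict[str, Any], timeout: float = 2.0) -> bool:
    request = urllib.request.Request(
        pdp_base_url.rstrip("/") + "/access/v1/evaluation",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            result = json.load(response)
            return isinstance(result, dict) and result.get("decision") is True
    except (OSError, ValueError):
        # Fail closed: an unreachable PDP or a malformed reply means "deny".
        return False


payload = build_evaluation_request(
    "alice@example.com", ["planner-agent", "export-tool"],
    "read", "crm_record", "12345", intent="summarize open deals")
print(json.dumps(payload, indent=2))
```

Failing closed on any transport or parsing error is a deliberate choice: for agent actions, an unavailable decision point should stop the action rather than wave it through.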

Moving Toward a Governed Future

The viral success of OpenClaw proved that the technology for autonomous agents is ready. Now, the enterprise must prove that its security architecture is ready.

The goal isn't to build walls around AI, but to build the steering and braking systems that make it safe to go fast. By shifting the focus from "blocking prompts" to "authorizing actions," CISOs can finally provide a clear roadmap for AI adoption. With a blueprint that combines automated discovery, intent-aware enforcement, and continuous behavioral monitoring, the enterprise can move past the pilot phase and start reaping the true benefits of the agentic era.

The future of AI is autonomous. The future of AI security is runtime authorization.

The Agentic Security Series:

- Part 1: The Blueprint for Agentic Security (Current)